The beauty of adopting a Markovian approach to training.

Alan Couzens, M.Sc.(Sports Science)

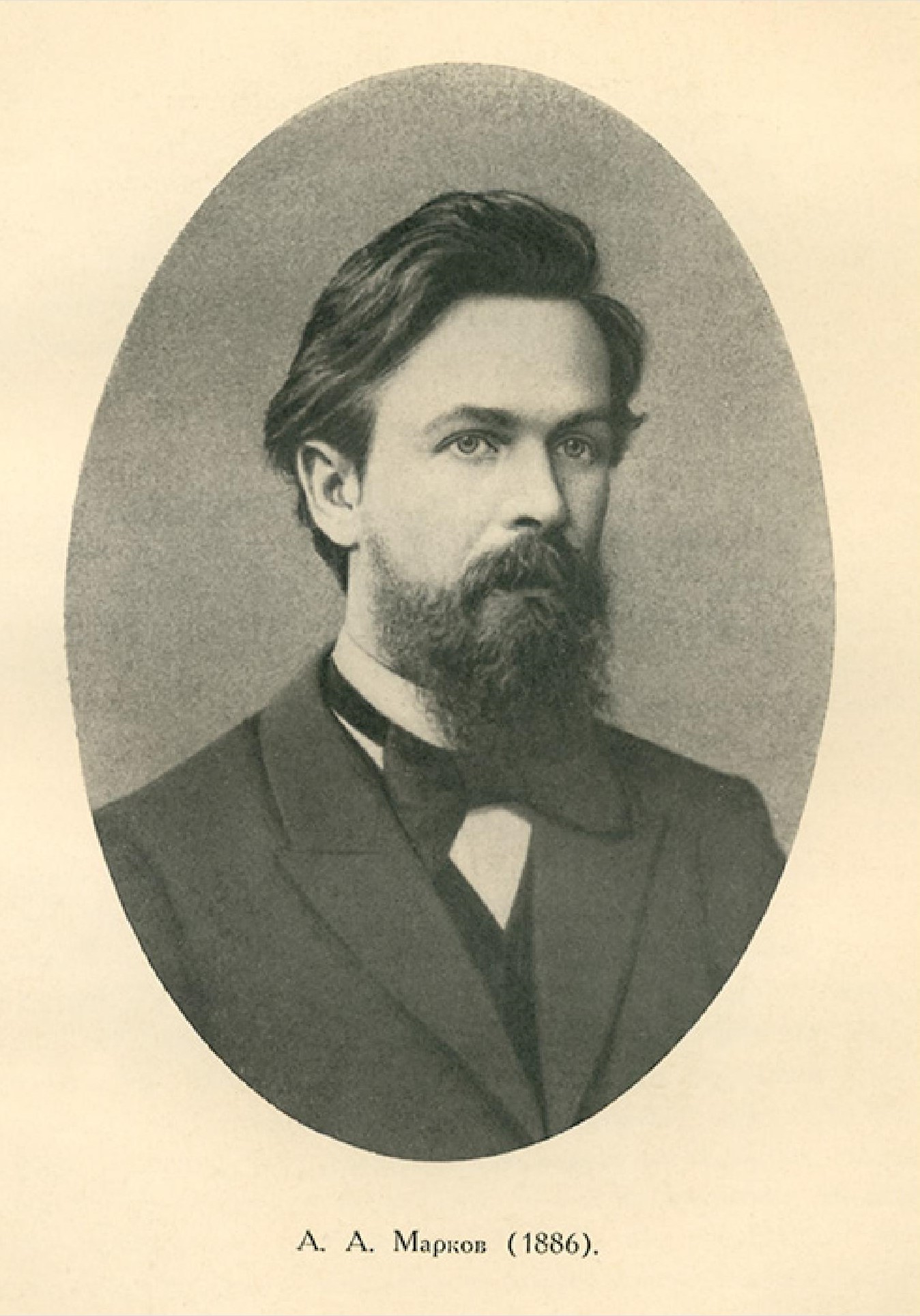

The guy pictured above, Andrey Markov, may be one of the greatest sport psychologists of our time. This is especially impressive considering that he never received any training in sport psychology or, as far as I know, ever conversed with an athlete for that matter :-) Let me explain...

One of the most discouraging sights for both coach and athlete when they log in to Training Peaks is a sea of red. As you probably know, the default Training Peaks setting highlights any workout that wasn't completed to plan with a big, 'in your face' red reminder that... well... you suck. At least, that is how most committed athletes interpret it: as a big, glaring reminder that you failed to get it done. Worse still, it lingers. Every time you scroll back through your calendar or look over your Annual Training Plan, you're reminded of where you're 'supposed to be' versus where you are. Plenty can happen between when a training plan is drafted and when it is carried out, some of it within the athlete's control and some of it not, that affects whether the athlete is able to complete the program 'to plan'. None of that changes the fact that, when an athlete looks back over weeks or months that fell off the plan, the experience & the emotions are generally very negative. So negative, in fact, that they can directly affect the athlete's motivation & their belief in themselves to "stick to a plan" moving forward. Sometimes, as a Coach, I wish I had access to the Star Trek memory eraser... :)

Markov, a Russian mathematician working in the late 19th and early 20th centuries, also had a real interest in memory of the past; more specifically, in just how unnecessary it was in many systems. He is most famous for his work on Markov Chains: systems where the probability of moving to any future state depends only on the current state, not on the sequence of states that came before it. An example of a Markov Chain is shown below.
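Before the example, it may help to see that definition written out. For a sequence of states $X_0, X_1, X_2, \dots$, the Markov property says that conditioning on the whole history gives exactly the same prediction as conditioning on the present state alone:

$$
P(X_{n+1} = x \mid X_n = x_n, X_{n-1} = x_{n-1}, \dots, X_0 = x_0) = P(X_{n+1} = x \mid X_n = x_n)
$$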

If my current state is "eating cheese", there is a 50% chance that I will eat grapes next and a 50% chance that I will eat lettuce. It doesn't matter how I came to be eating cheese; all that matters is the choice that I make from this point. That choice determines the new state that I find myself in (eating grapes or eating lettuce), and the process continues from there, with every ('good' or 'bad') action landing me in a new state.
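To make the 'memoryless' idea concrete, here is a minimal Python sketch that simulates the chain. Only the cheese row (50/50 grapes or lettuce) and the 40% grapes-to-cheese figure come from this post; the remaining probabilities are illustrative assumptions, so treat the numbers as placeholders rather than the values in the original figure.

```python
import random

# TRANSITIONS[current][next] = P(next | current).
# The cheese row and grapes->cheese (0.4) follow the text; every other
# value is an illustrative assumption.
TRANSITIONS = {
    "cheese":  {"cheese": 0.0, "grapes": 0.5, "lettuce": 0.5},
    "grapes":  {"cheese": 0.4, "grapes": 0.1, "lettuce": 0.5},
    "lettuce": {"cheese": 0.6, "grapes": 0.4, "lettuce": 0.0},
}


def next_state(current, rng):
    """Sample the next state using ONLY the current state (the Markov property)."""
    options = TRANSITIONS[current]
    return rng.choices(list(options), weights=list(options.values()), k=1)[0]


def simulate(start, steps, seed=0):
    """Return the list of states visited, starting from `start`."""
    rng = random.Random(seed)
    path = [start]
    for _ in range(steps):
        # Note that the history in `path` is never consulted, only path[-1].
        path.append(next_state(path[-1], rng))
    return path


if __name__ == "__main__":
    print(simulate("cheese", 10))
```

Notice that `next_state` receives a single state, not the path so far: there is simply nowhere for the past to enter the calculation.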

I would argue that the process of athletic training falls very much under this definition of a Markovian Process, in the sense that the best training action that you can take today depends only on the state that you currently find yourself in. How you got here is immaterial. Whether it was through rigid commitment to this point, through uncontrollable life events, through good luck, bad luck or laziness, it doesn't matter...

You are here & all that matters, in terms of where you go from here, are the actions that you decide to take TODAY, right now, from your current state.

The Markov property, this central trait of independence from the past that defines these systems, has also been termed "Memorylessness". I would also argue that memorylessness can be a wonderful thing when it comes to longevity in sport! Nothing is more demoralizing than comparing yourself with where you 'should have' been or, for that matter, with where you have been in the past. Nothing is more empowering than taking the stance that it all begins today, and, more than that, taking that stance every day from here on!

Beyond theoretical constructs, Markov Chains are an important concept in many practical applications. One of these is the branch of Machine Learning termed Reinforcement Learning. In systems that exhibit the Markov Property, Reinforcement Learning can be applied to determine the optimal action to take from any given state. Adding actions and rewards to a Markov Chain creates a Markov Decision Process, where the choices that I make within the environment affect (but do not necessarily completely determine) whether I move closer to or further away from my goal. By observing the link between the actions taken and whether they move it closer to or further away from the goal, the system learns over time which actions are 'good' with respect to that goal.
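To make that 'observe and adjust' loop concrete, here is a minimal sketch of tabular Q-learning, one standard reinforcement learning algorithm. This post doesn't say which algorithm is used, so treat it as an illustration of the general idea rather than the method behind it. Each experience (state, action, reward, next state) nudges the estimated value of that action toward the reward plus the discounted value of the best action available afterwards. The one-state environment `toy_step`, its 'good' and 'bad' actions, and the values of `alpha`, `gamma` and `epsilon` are all hypothetical.

```python
import random
from collections import defaultdict


def q_update(Q, state, action, reward, next_state, actions, alpha=0.1, gamma=0.9):
    """One tabular Q-learning step:
    Q(s, a) <- Q(s, a) + alpha * (reward + gamma * max_a' Q(s', a') - Q(s, a))
    """
    best_next = max(Q[(next_state, a)] for a in actions)
    td_target = reward + gamma * best_next
    Q[(state, action)] += alpha * (td_target - Q[(state, action)])
    return Q[(state, action)]


def choose_action(Q, state, actions, epsilon, rng):
    """Epsilon-greedy: usually exploit the best-known action, sometimes explore."""
    if rng.random() < epsilon:
        return rng.choice(actions)
    return max(actions, key=lambda a: Q[(state, a)])


def toy_step(state, action):
    """Hypothetical one-state environment: 'good' pays 1, 'bad' pays 0."""
    reward = 1.0 if action == "good" else 0.0
    return reward, state  # the next state is always the same single state


def learn(steps=500, epsilon=0.2, seed=0):
    """Run Q-learning on the toy environment and return the learned table."""
    rng = random.Random(seed)
    actions = ["bad", "good"]
    Q = defaultdict(float)  # unseen (state, action) pairs start at 0
    state = "s0"
    for _ in range(steps):
        action = choose_action(Q, state, actions, epsilon, rng)
        reward, next_state = toy_step(state, action)
        q_update(Q, state, action, reward, next_state, actions)
        state = next_state
    return Q


if __name__ == "__main__":
    Q = learn()
    print(Q[("s0", "good")], Q[("s0", "bad")])
```

Because both actions start at zero and ties go to 'bad', a purely greedy agent could keep picking 'bad' forever; the occasional random exploration (`epsilon`) is what lets it discover that 'good' pays more.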

In the example given above, if my objective function was to get fat, the optimal outcome would be to find myself eating cheese as often as possible. I could therefore apply reinforcement learning to discover a policy that maximizes my chance of eating cheese, i.e. avoid eating grapes, because from that state there is only a 40% chance I'll find myself eating cheese next, and instead swing between eating lettuce and eating cheese.
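Here is a minimal value-iteration sketch of that idea. It turns the 50/50 step out of "eating cheese" into a choice between grapes and lettuce, rewards every step spent eating cheese, and lets the grapes and lettuce states follow the chain. The 40% grapes-to-cheese figure comes from this post; the other probabilities and the discount factor `GAMMA` are assumptions for illustration. Value iteration is a planning (dynamic programming) method that needs the transition probabilities up front, whereas a learning method such as Q-learning would estimate the same values from experience.

```python
GAMMA = 0.9  # discount factor (illustrative assumption)

# P[state][action] = {next_state: probability}
# From "cheese" we get a real choice of what to eat next; the other states have
# a single "continue" action that follows the chain. grapes->cheese (0.4) is
# from the text; all other numbers are assumptions.
P = {
    "cheese": {
        "grapes": {"grapes": 1.0},
        "lettuce": {"lettuce": 1.0},
    },
    "grapes": {"continue": {"cheese": 0.4, "grapes": 0.1, "lettuce": 0.5}},
    "lettuce": {"continue": {"cheese": 0.6, "grapes": 0.4}},
}


def reward(next_state):
    """Objective from the text: +1 for every step spent eating cheese."""
    return 1.0 if next_state == "cheese" else 0.0


def q_value(V, state, action):
    """Expected reward plus discounted value of wherever the action leads."""
    return sum(p * (reward(s2) + GAMMA * V[s2]) for s2, p in P[state][action].items())


def value_iteration(tol=1e-9):
    """Repeat Bellman optimality backups until values stop changing."""
    V = {s: 0.0 for s in P}
    while True:
        new_V = {s: max(q_value(V, s, a) for a in P[s]) for s in P}
        delta = max(abs(new_V[s] - V[s]) for s in P)
        V = new_V
        if delta < tol:
            break
    # The policy picks, in each state, the action with the highest value.
    policy = {s: max(P[s], key=lambda a: q_value(V, s, a)) for s in P}
    return V, policy


if __name__ == "__main__":
    values, policy = value_iteration()
    print(policy["cheese"])
```

With these assumed numbers, the resulting policy picks lettuce from cheese, which matches the "swing between lettuce and cheese" reasoning above.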

Similarly, in our context of athletic training, if my available actions were resting, doing a hard interval session & doing a long aerobic session, and my reward function was to maximize fitness, I could select the sequence of actions that best accomplishes that objective. That is precisely the approach that I am currently taking as we apply reinforcement learning to the task of optimizing athletic performance.
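As a toy illustration of the training version, the sketch below plans over a known, made-up model instead of learning from experience, so strictly it is dynamic programming (backward induction) rather than reinforcement learning proper. The state is a (fitness, fatigue) pair of integers and the three actions mirror the ones named above. Their effects are entirely hypothetical: resting clears fatigue, training adds fitness and fatigue, and training at or above a hypothetical fatigue threshold (`OVERREACH`) adds fatigue without any fitness gain. None of these numbers come from this post, and this is not the model behind the software described here.

```python
from functools import lru_cache

MAX_FATIGUE = 5  # hypothetical cap on the fatigue scale
OVERREACH = 4    # hypothetical: training at this fatigue or above builds no fitness

# Hypothetical (fitness_gain, fatigue_change) for each action.
EFFECTS = {
    "rest": (0, -2),
    "long_aerobic": (1, 1),
    "intervals": (2, 2),
}


def step(state, action):
    """Return the next (fitness, fatigue) state; depends only on the current state."""
    fitness, fatigue = state
    gain, tired = EFFECTS[action]
    if action != "rest" and fatigue >= OVERREACH:
        gain = 0  # too tired to adapt
    new_fatigue = min(MAX_FATIGUE, max(0, fatigue + tired))
    return (fitness + gain, new_fatigue)


@lru_cache(maxsize=None)
def best_plan(state, days_left):
    """Backward induction: best final fitness reachable in `days_left` days.

    Returns (final_fitness, tuple_of_actions). The answer is keyed only on the
    current state and the days remaining, never on how the state was reached.
    """
    if days_left == 0:
        return state[0], ()
    options = []
    for action in EFFECTS:
        value, plan = best_plan(step(state, action), days_left - 1)
        options.append((value, (action,) + plan))
    return max(options)


if __name__ == "__main__":
    value, plan = best_plan((0, 0), 7)
    print(value, plan)
```

Because `best_plan` is keyed only on the current (fitness, fatigue) state and the days remaining, two athletes who arrive at the same state by completely different routes get the same recommendation, which is the memorylessness point in code form.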

Software that implements reinforcement learning to identify the optimal training session for an individual athlete from any given state will clearly be very powerful. However, the real power of Markov's legacy is far simpler: pay no mind to the highs or lows of the past and consider only where you are... today, right now. Always keep in mind that the only thing that will ultimately determine your future in sport is following Markov's lead, choosing "Memorylessness" & simply focusing on taking the best action for your current state (of both fitness and fatigue)... today, then the next day, then the day after.

Train smart,

AC
